SlackGPT - My First Satellytes Project

Hi, my name is Jonas.

I study computer science at the Technical University of Munich, where I am currently writing my bachelor's thesis. To enrich my student life and gain my first professional work experience, I looked for a tech job as a working student. After some research and a few interviews, I landed at Satellytes.

The Product

I was immediately given my first responsibility: a new project with the working title "SlackGPT". The idea was to breathe new life into the company's rarely used and dusty OpenAI license. Until then, employees had to visit openai.com and hold their conversations in a separate browser window, so most of them stuck to their old habits and mainly used conventional search engines. Since Slack is Satellytes' main communication tool and is integrated into many different workflows, a chatbot that acts as a gateway to OpenAI's services was the obvious solution.

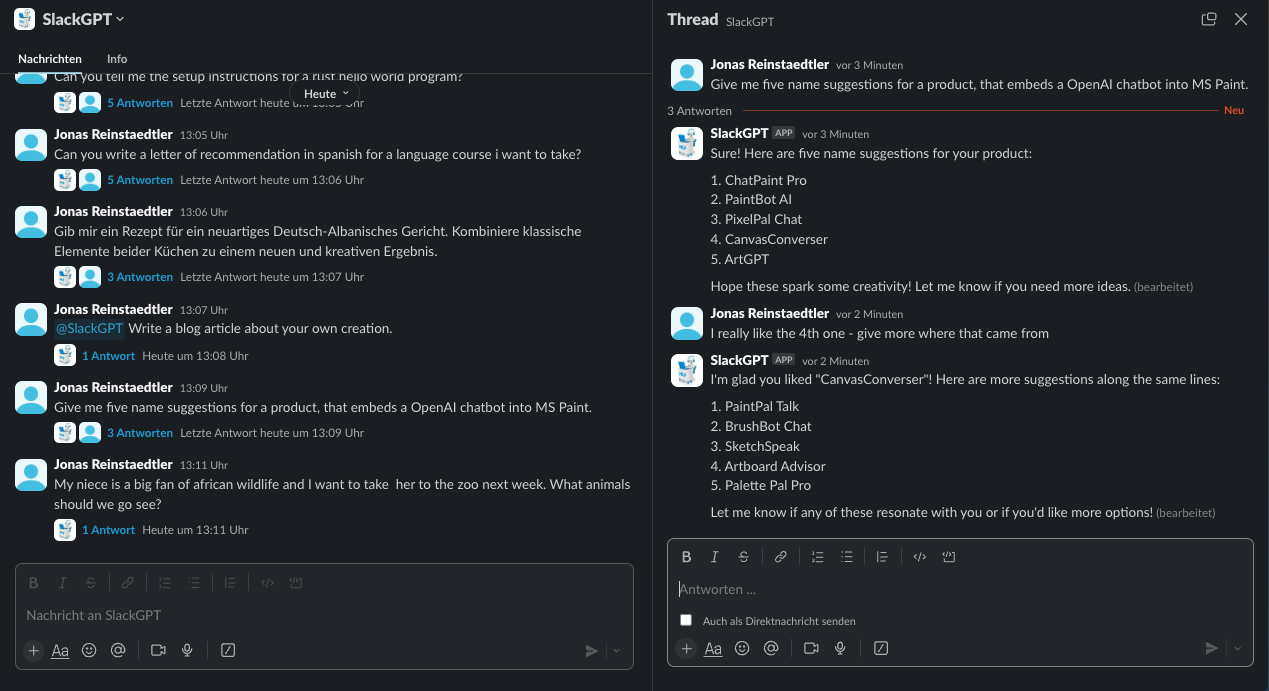

Together with my newly assigned mentor, I began brainstorming the required technologies and potential features. We quickly landed on our first and most important component: context-based conversations. This would allow users to have distinct conversations with SlackGPT, rather than just isolated questions and answers. Slack's thread feature, which enables nested conversations within a single chat, is perfectly suited for this purpose.

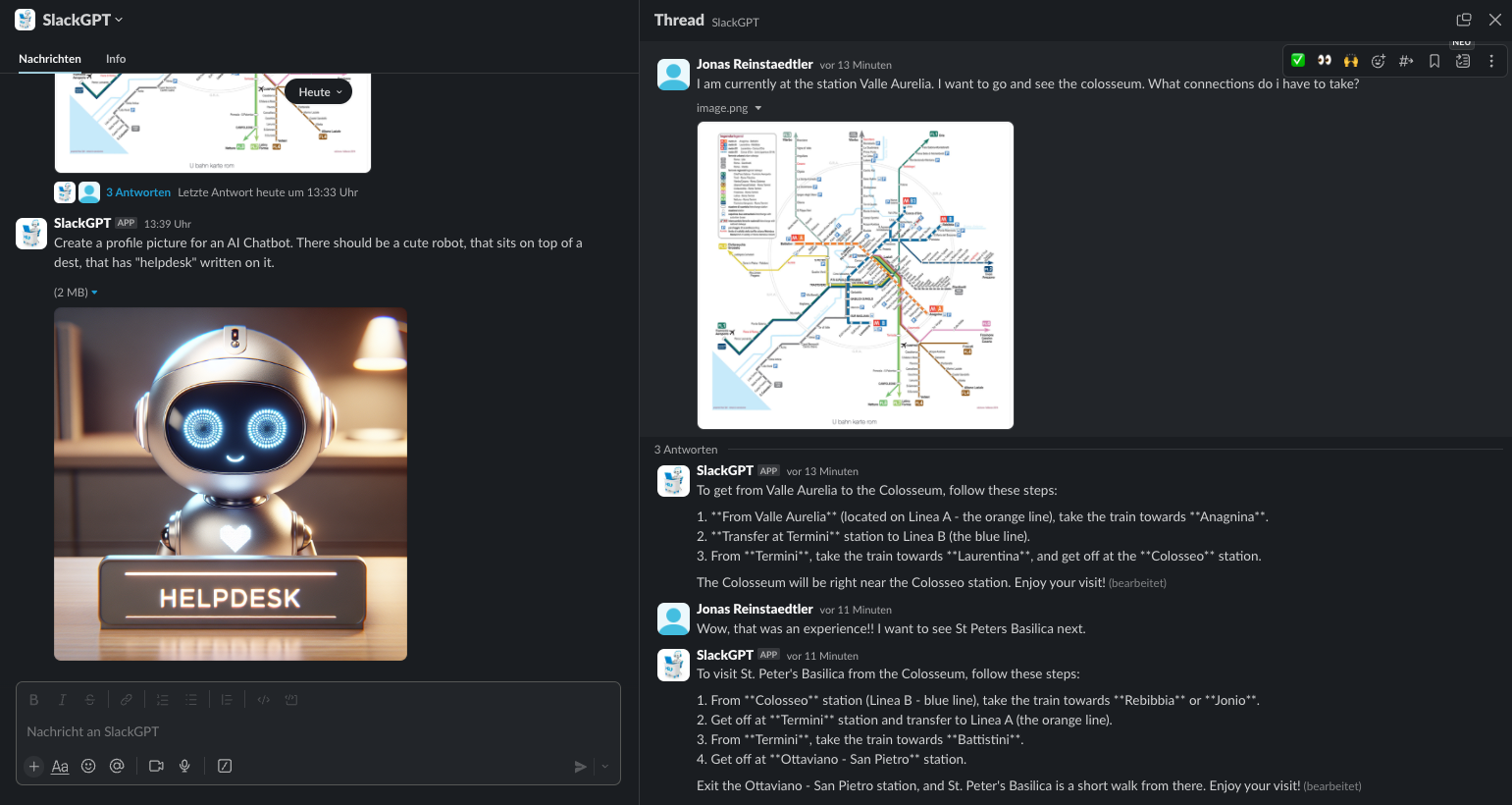
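
To make the thread-based conversations a little more concrete, here is a minimal sketch of how a bot could keep one message history per Slack thread. It assumes that threads are keyed by the parent message's timestamp (Slack's `thread_ts`) and that the history is kept in memory; the class and method names are hypothetical and not taken from the SlackGPT repository. The roles `user` and `assistant` follow OpenAI's chat message format.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch, not SlackGPT's actual code: one message history per Slack thread.
// Threads are keyed by the parent message's timestamp (Slack's thread_ts), and the
// roles "user" and "assistant" follow OpenAI's chat message format.
final class ThreadConversationStore {

    record ChatMessage(String role, String content) {}

    private final Map<String, List<ChatMessage>> threads = new ConcurrentHashMap<>();

    /** Appends a message to the thread and returns the full history, oldest first. */
    List<ChatMessage> append(String threadTs, String role, String content) {
        // compute() runs atomically per key, so concurrent replies in one thread are not lost.
        return threads.compute(threadTs, (key, history) -> {
            List<ChatMessage> next = history == null ? new ArrayList<>() : new ArrayList<>(history);
            next.add(new ChatMessage(role, content));
            return List.copyOf(next); // store an immutable snapshot
        });
    }

    /** Returns the history of a thread, or an empty list for a new conversation. */
    List<ChatMessage> history(String threadTs) {
        return threads.getOrDefault(threadTs, List.of());
    }
}
```

For each new question, the bot would call `append(threadTs, "user", text)`, send the returned history to OpenAI, and store the answer with `append(threadTs, "assistant", answer)`. Because a serverless instance may be shut down when idle, an in-memory map like this loses its contents; rebuilding the history from the thread itself (Slack's `conversations.replies` method returns the messages of a thread) is one way around that.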

After finishing this first prototype and receiving a lot of positive feedback from our colleagues, we decided that image support should be the next big step.

We wanted users to be able to paste images into a conversation and receive meaningful answers, and also to generate images themselves. After a lot of back and forth with both Slack's and OpenAI's APIs, we achieved our goal. Unfortunately, image generation isn't possible in the middle of an ongoing conversation, so it only works as a standalone request.
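
One detail worth knowing when wiring up pasted images: Slack serves uploaded files through private URLs that can only be downloaded with the bot's token, so the image has to be fetched and handed to OpenAI in another form. A minimal sketch, assuming the bytes have already been downloaded and the MIME type is known, is to inline the image as a base64 data URL, which OpenAI's Chat Completions API accepts as an image input. The class and method names are hypothetical; this is not necessarily how SlackGPT does it.

```java
import java.util.Base64;

// Hypothetical sketch, not SlackGPT's actual code: wraps image bytes as an image
// content part for OpenAI's Chat Completions API, which accepts base64 data URLs.
final class ImageInputs {

    /** Encodes raw image bytes as a data URL, e.g. "data:image/png;base64,...". */
    static String toDataUrl(byte[] imageBytes, String mimeType) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(imageBytes);
    }

    /** Builds the JSON for one image content part; real code should use a JSON library. */
    static String imageContentPart(byte[] imageBytes, String mimeType) {
        return "{\"type\":\"image_url\",\"image_url\":{\"url\":\""
                + toDataUrl(imageBytes, mimeType) + "\"}}";
    }
}
```

Such a content part would sit next to the user's text in the same message, so the model sees the question and the image together.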

During development, we noticed that interacting with the chatbot didn't feel very responsive. After sending a message, users simply had to sit and wait for an answer. The problem became most obvious in long conversations or when the desired answer was particularly detailed. In extreme cases, users spent up to 10 seconds staring at a static screen, not knowing whether the bot had crashed once again.

We solved this by mirroring chatgpt.com's behaviour and streaming the answers back to the user. Since large language models generate their text sequentially, token by token, we can show new content as soon as OpenAI produces it by continuously editing the bot's existing message.
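
Editing a Slack message for every single token would quickly run into Slack's Web API rate limits. A minimal sketch of one common approach, assuming the bot has already posted a placeholder message it can edit, is to accumulate the streamed chunks and publish the text so far at most once per interval. The `publish` callback is a hypothetical stand-in for the call that edits the message (for example Slack's `chat.update` method), and the class name and interval are illustrative assumptions.

```java
import java.util.function.Consumer;
import java.util.function.LongSupplier;

// Hypothetical sketch, not SlackGPT's actual code: accumulates streamed chunks and
// publishes the text so far at most once per interval. `publish` stands in for the
// call that edits the Slack message (for example Slack's chat.update method).
final class StreamingMessageUpdater {

    private final StringBuilder text = new StringBuilder();
    private final Consumer<String> publish;
    private final LongSupplier clockMillis;
    private final long minIntervalMillis;
    private long lastPublishMillis;
    private int publishedLength;

    StreamingMessageUpdater(Consumer<String> publish, LongSupplier clockMillis, long minIntervalMillis) {
        this.publish = publish;
        this.clockMillis = clockMillis;
        this.minIntervalMillis = minIntervalMillis;
    }

    /** Called for every text chunk the model streams back. */
    void onChunk(String chunk) {
        text.append(chunk);
        if (text.length() == publishedLength) {
            return; // nothing new to show
        }
        long now = clockMillis.getAsLong();
        // Show the first chunk immediately, then throttle edits to respect rate limits.
        if (publishedLength == 0 || now - lastPublishMillis >= minIntervalMillis) {
            flush(now);
        }
    }

    /** Called when the stream ends, so the complete answer is always published. */
    void onComplete() {
        if (text.length() != publishedLength) {
            flush(clockMillis.getAsLong());
        }
    }

    private void flush(long now) {
        publish.accept(text.toString());
        publishedLength = text.length();
        lastPublishMillis = now;
    }
}
```

In production, the clock would simply be `System::currentTimeMillis`; injecting it makes the throttling easy to test. The interval is a trade-off: shorter intervals make the answer appear more smoothly, longer ones keep the number of edit requests low.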

We also added playful status notifications in the form of reaction emojis that indicate ongoing message processing or programming exceptions and make SlackGPT feel more alive and personal.

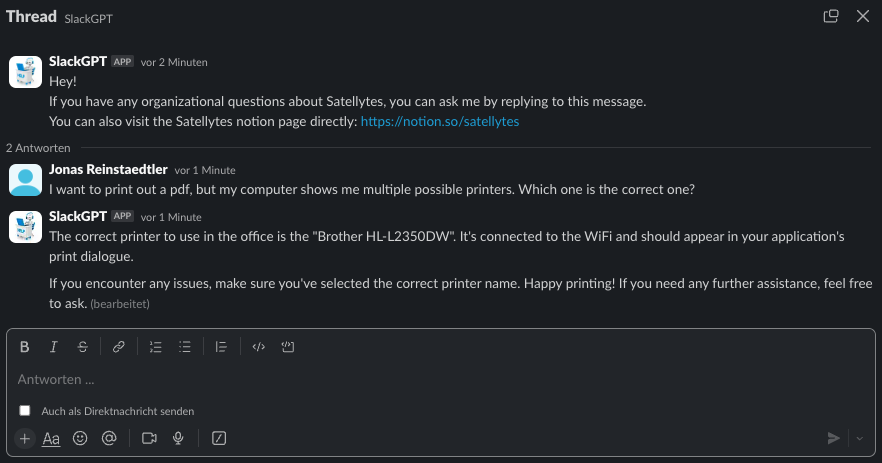
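
The reaction-based status notifications map naturally onto a try/catch/finally wrapper around the request handling. The sketch below assumes a hypothetical `Reactions` interface standing in for Slack's `reactions.add` and `reactions.remove` methods; the emoji names are placeholders rather than the ones SlackGPT actually uses.

```java
import java.util.function.Supplier;

// Hypothetical sketch, not SlackGPT's actual code: shows a "processing" reaction while
// a request runs and an error reaction if it fails. `Reactions` stands in for Slack's
// reactions.add and reactions.remove methods; the emoji names are placeholders.
final class StatusReactions {

    interface Reactions {
        void add(String emojiName);
        void remove(String emojiName);
    }

    static final String PROCESSING = "hourglass_flowing_sand";
    static final String FAILED = "x";

    static <T> T withStatus(Reactions reactions, Supplier<T> work) {
        reactions.add(PROCESSING);
        try {
            return work.get();
        } catch (RuntimeException e) {
            reactions.add(FAILED); // signal the exception to the user
            throw e;
        } finally {
            reactions.remove(PROCESSING); // processing is over either way
        }
    }
}
```

Putting the removal in `finally` guarantees that the "processing" emoji never lingers on a message, whether the request succeeds or fails.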

Satellytes also uses Notion, a note-taking application, to store information about organisational details and office life. We implemented a way for users to ask SlackGPT about this content.

Technical Details

Now for the part you have all been waiting for: an outline of the different tools and services we use to power SlackGPT.

The project is implemented in Java with the help of the Quarkus framework (https://quarkus.io). Quarkus is a Kubernetes-native Java framework tailored for GraalVM and OpenJDK HotSpot, prioritising fast boot times and low memory usage. Unlike traditional Java frameworks, Quarkus is designed with containerisation and cloud-native development in mind, which allows seamless integration with Kubernetes and serverless environments. It also unifies imperative and reactive programming styles, giving developers the flexibility to optimise performance while keeping the code easy to write.

To leverage the strengths of Quarkus, we use GitHub Actions as our CI/CD pipeline. Whenever new code is pushed to our repository, a custom action is triggered. A GitHub runner then executes a Dagger function (https://dagger.io) that compiles the project natively with Quarkus and packages it into a container. The resulting artifact is stored as a GitHub package. Google Cloud Platform then detects the new package, pulls it and deploys it to a Google Cloud Run instance.

This setup is serverless: the instance shuts down after a period of inactivity and is started again when a new request arrives, which it can only handle once it has booted. This makes the first request after an idle period slower, but it can help reduce costs for services that are seldom used. It is also where Quarkus' fast boot times pay off, since they keep that initial delay short.

Conclusion

Creating the chatbot for Satellytes' Slack workspace has been a rewarding and insightful experience. The project had just the right scope to be challenging without being overwhelming. Developing an internal tool helped me get to know the company and my colleagues more quickly and deepened my knowledge of natural language processing and machine learning. I also learned the importance of effective communication and collaboration.

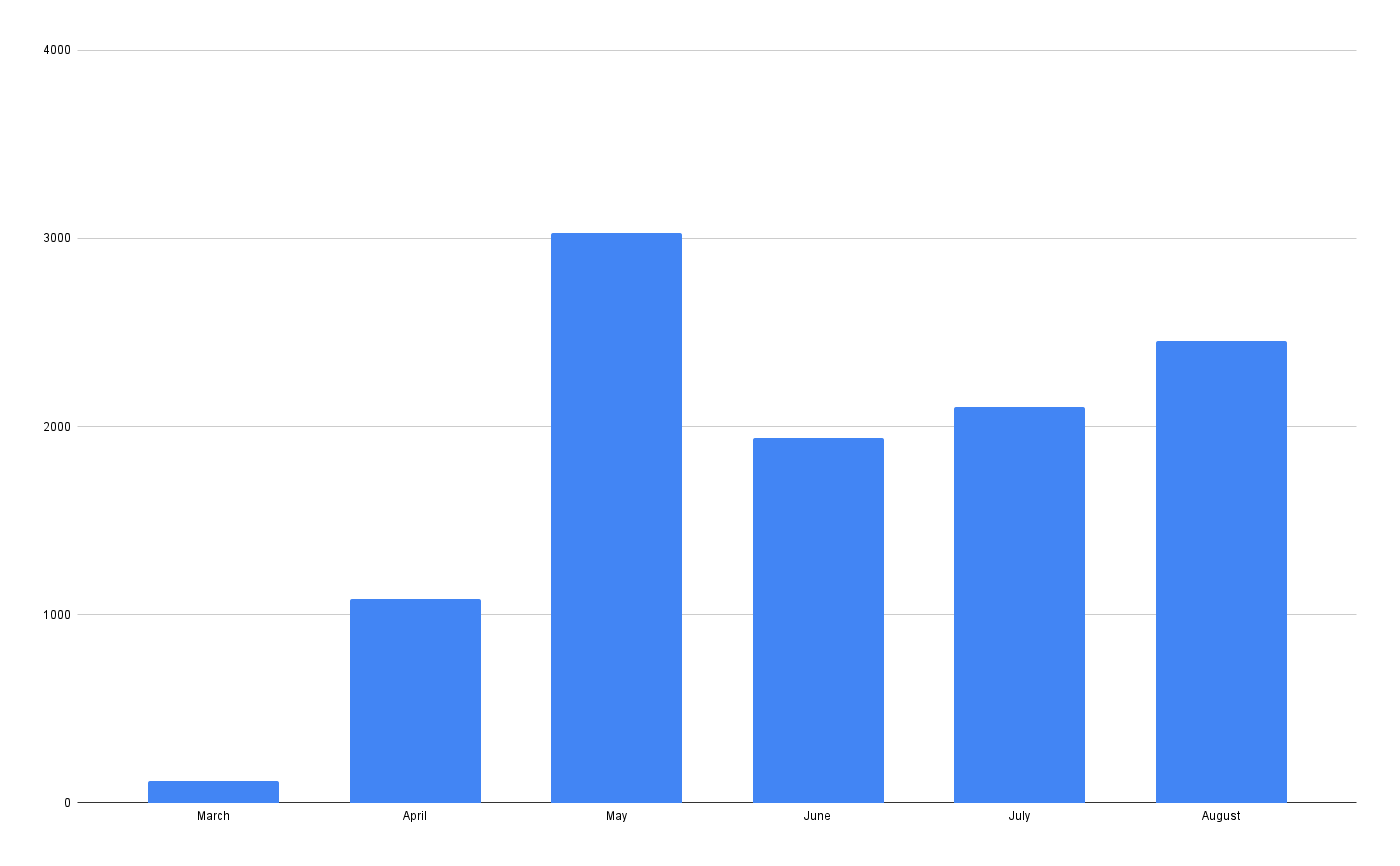

SlackGPT had already gained a few loyal users during development, and after we completed it, the number of active users grew even further. The increasing number of exchanged messages, up to 20 requests per user per day, confirms the project's success. Using OpenAI's API directly and integrating it into our existing software toolset, instead of relying on OpenAI's external website, was definitely the right choice: the bot is now used by a significant part of the company. It also pays off financially, as SlackGPT costs only about $30 per month.

Now you have a general idea of what drives the development of SlackGPT: the initial idea, its different features and the technologies that power it. The natural next step is to try it yourself at https://github.com/satellytes/slackgpt. Feel free to fork it, customise it to your liking and tell us what you think.